David Gough, Chris Maidment and Jonathan Sharples

This blog post is based on the Evidence & Policy article, ‘Enabling knowledge brokerage intermediaries to be evidence-informed.’

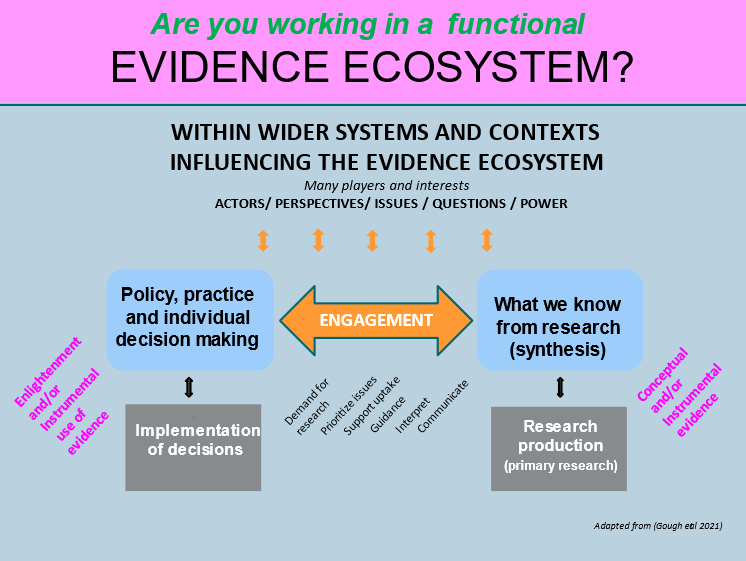

Research evidence can be useful (alongside lots of other information) in informing policy, practice and personal decision making. But does this always happen? It tends to be assumed that if research is available and relevant then it will be used in an effective self-correcting ‘evidence ecosystem’, but in many cases the ‘evidence ecosystem’ may be dysfunctional or not functioning at all. Potential users may not demand relevant evidence, may not be aware that relevant research exists, or may misunderstand its use and relevance.

Knowledge brokerage intermediary (KBI) agencies (such as knowledge clearinghouses and What Works Centres) aim to improve this by enabling engagement between research use and research production. We believe that KBIs are essential innovations for improving research use. In this blog, we suggest four ways that they might be further developed by having a more overt focus on the extent to which they themselves are evidence informed in their work, as we explore in our Evidence & Policy article.
